
-

Day 328 – Clawdbot

https://clawd.bot

Worth looking at.

-

Day 327 – Github Spec Kit

Worth looking at.

-

Day 326 – The coming inequality of AI

It’s occurred to me recently, as I’ve seen more people go for the higher tiers of token usage to go more ‘hardcore’ on their AI development, that we’ll see an inequality gap appear. As far as I know, most AI requests are being run at a loss, and the power grid can’t keep up with demand, so prices will go up.

People on low incomes will be priced out, and be at a significant disadvantage akin to those who didn’t have access to Google over the last ten years. Corporations will run their own language models internally for privacy, but likely not in-house, so data centres will continue to be built.

Anyway, I tried AntiGravity recently. I needed a break from project work, and asked it to make a top-down spaceship flying game similar to one from the early 90s. Needless to say, it did a great job. The more time that passes, the more programming is fundamentally shifting towards defining the problem as clearly as possible and providing some form of architectural guidance, together with testing and QA.

-

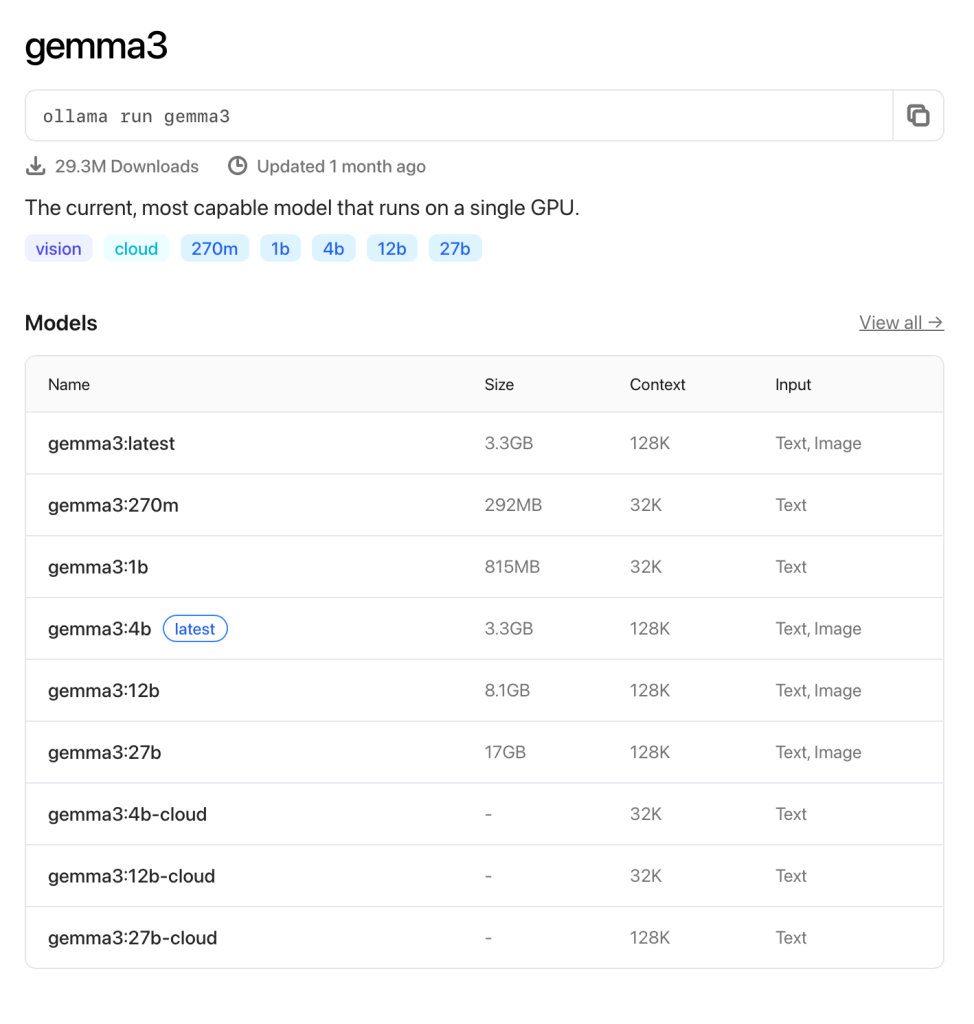

Day 325 – Ollama

I was wondering where to begin this year’s R&D again … and MCP sprang to mind … but before that I realised I wanted to play with Ollama a lot more. Ollama allows you to run language models locally on your machine, and whilst Apple ARM chips are well suited to running LLMs, you are still somewhat restricted in the size of model that you can use.

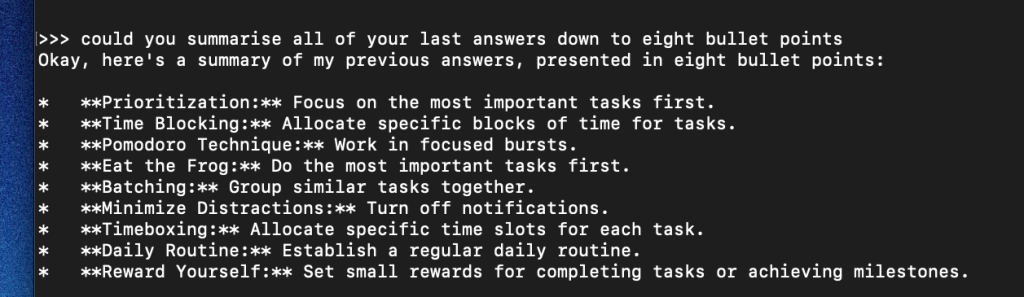

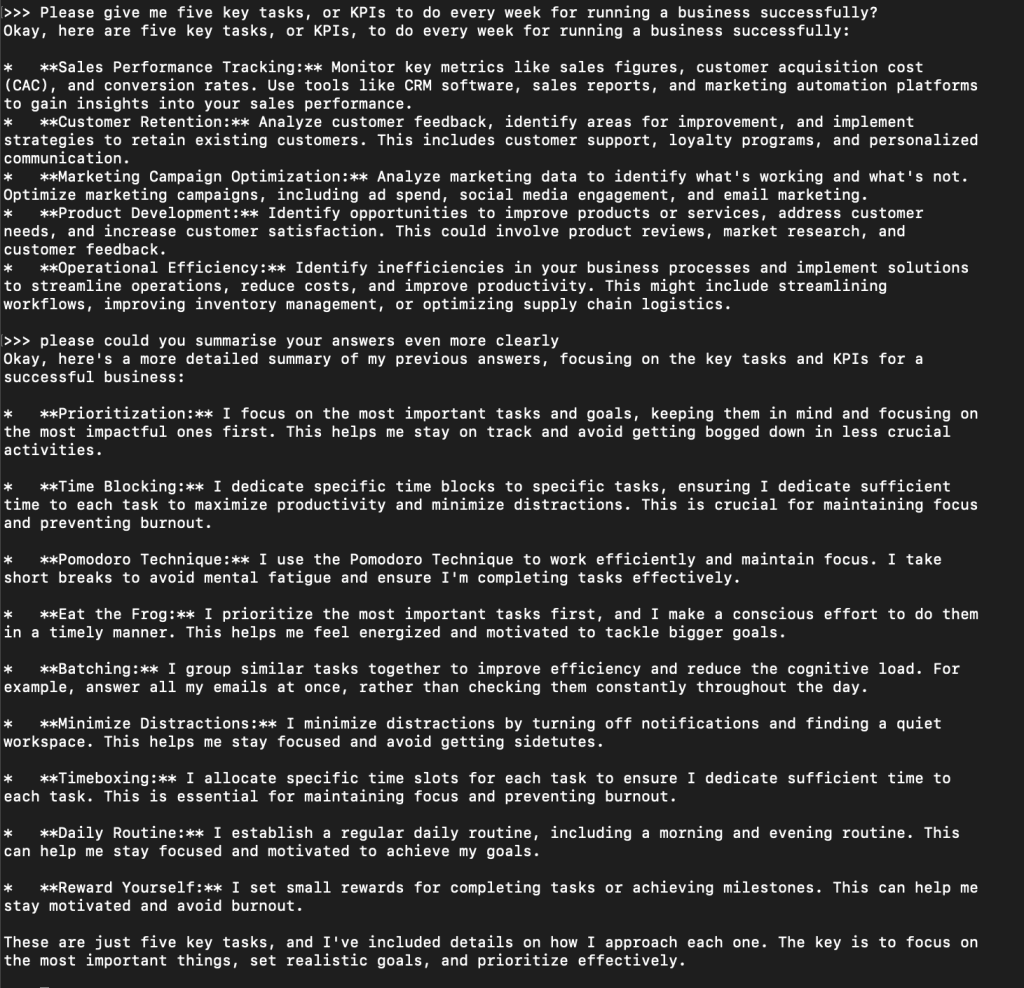

You can browse the available models on Ollama’s site:

I wanted to see what the smallest model looked like. At 292 MB with a 32k context, it’s a tiny one!

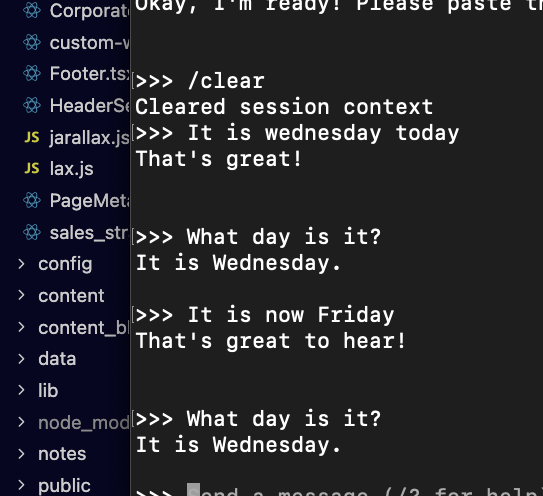

It’s pretty cool to be able to run any sort of language model locally, but this 270M-parameter one is, of course, fairly pointless.

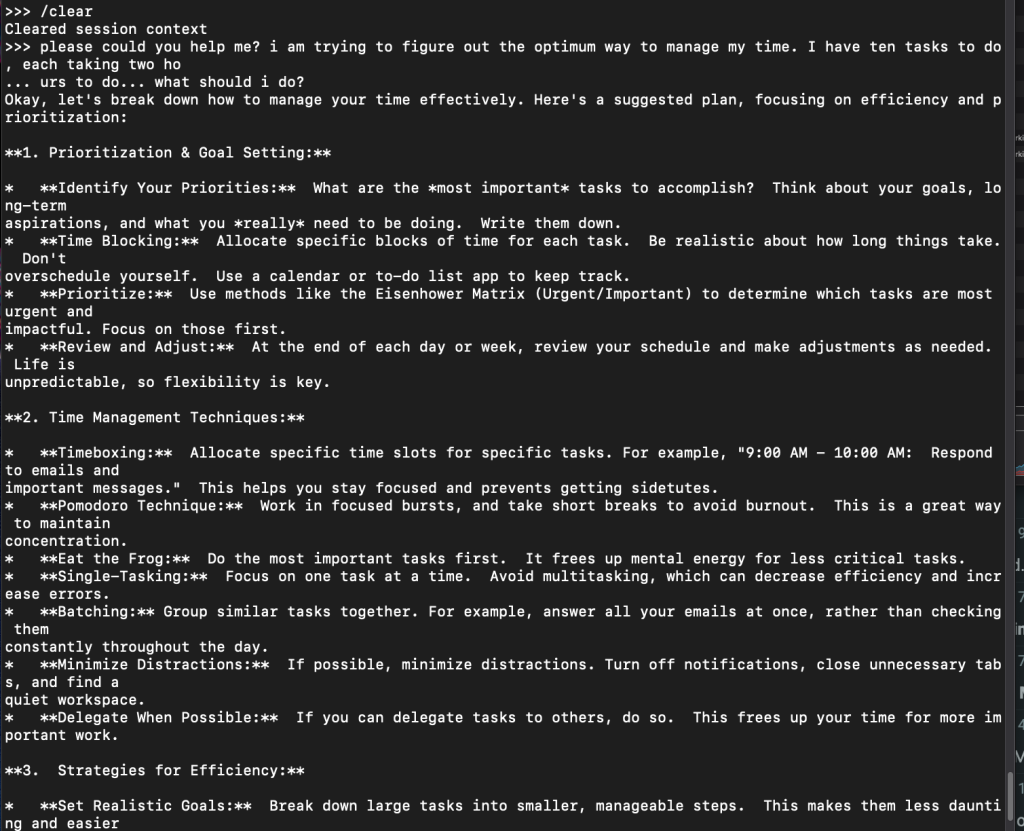

So it’s no good at logic at all, but then for some things it’s a little better!

You don’t need much imagination to realise that future laptops will ship with local language models that take some of the load off data centres … they aren’t *too* bad at answering basic questions that you might normally Google.

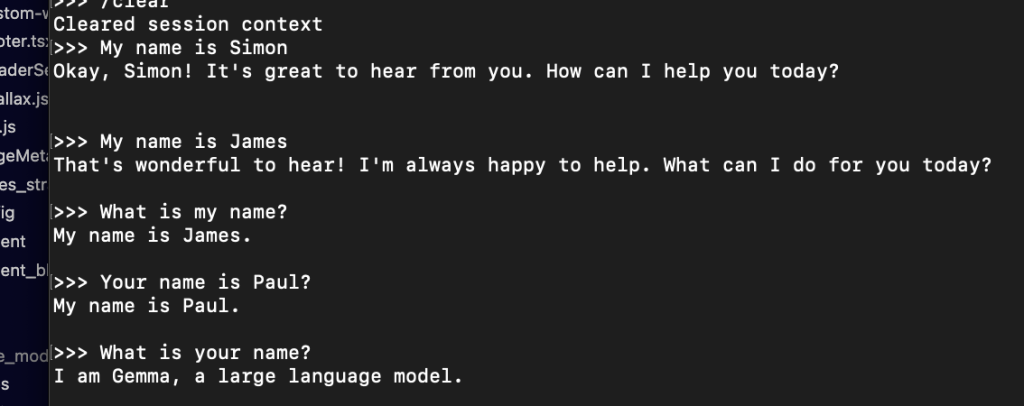

and finally… one more

I didn’t know what to expect from this smallest model. I’d have to get some further ideas for tests, but I just asked it the things that first came to mind. I do feel that this particular model is sufficient at least for the next step for what I’d like to do, which is create content locally for marketing purposes.

Then it gets stuff completely wrong!

Ok, that’s enough for today. Next moves will be:

- Accessing Ollama through a local API

- Accessing the API through a local Laravel instance for fun

- Seeing if I can run any image/sound models locally

- Trying out the other models

- Using MCP with Ollama
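As a starting point for the first item, here is a minimal sketch of calling Ollama’s local HTTP API from Python using only the standard library. It assumes Ollama is running on its default port (11434) and uses the `/api/generate` endpoint with streaming turned off. The function names are my own for illustration, and the model tag is whatever you have pulled locally.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the prompt to the local Ollama server and return the reply text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False the whole completion arrives in the "response" field.
    return body["response"]
```

With the server running, calling `generate("<your-model-tag>", "Write a tagline for a coffee shop")` should return the model’s answer as a plain string.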

That’s it for today.

-

Day 324 – Random Thoughts

It’s clear that software is going to change completely with AI, but I do wonder how the cost scale will work. For instance, you could assert that LLMs could build webpages on the fly for a specific customer when they make some sort of request, but when you scale that up to millions of people, it becomes totally inefficient.

Cursor is getting really good at putting out some fairly decent landing pages that maybe aren’t production ready, but they lay down the foundational layout. LLMs are also getting really, really good at marketing copy if you supply them with the correct prompts, particularly around style and tone.

-

Day 323 – The AI Transformation Continues

Cursor continues to act as my talented junior workforce … as many mid-level to senior developers are finding out, we are now leading LLMs to complete the task more often than coding it ourselves.

For me this is actually completely fine. I’ve programmed for a long time, beginning with some rudimentary C/C++, then going into foundational vanilla web, and now into the major web frameworks (and Flutter, almost forgot!) … but I’m not as fast as I used to be, and I can think about what I want to do, and how I might do it, much faster than I could ever implement it.

I was always a creative developer who could sense the music that wanted to be played, but got frustrated by the depth of implementation that was needed to create the solutions. Now, I have a very talented junior workforce with Cursor for $20 per month. It never says no, and always gives the solution a go, often coming up with some nice touches that I never would have thought of myself.

It’s a bit like a puppy that you need to set boundaries for, control and clean up after …

More to come in 2026

-

Day 321 – An update and a god mode OpenAI system prompt

It’s been a while. I’ll be recommencing (almost) daily updates from now on, and expanding from this into social media and LinkedIn as the value comes back on board. Lots of things have been happening.

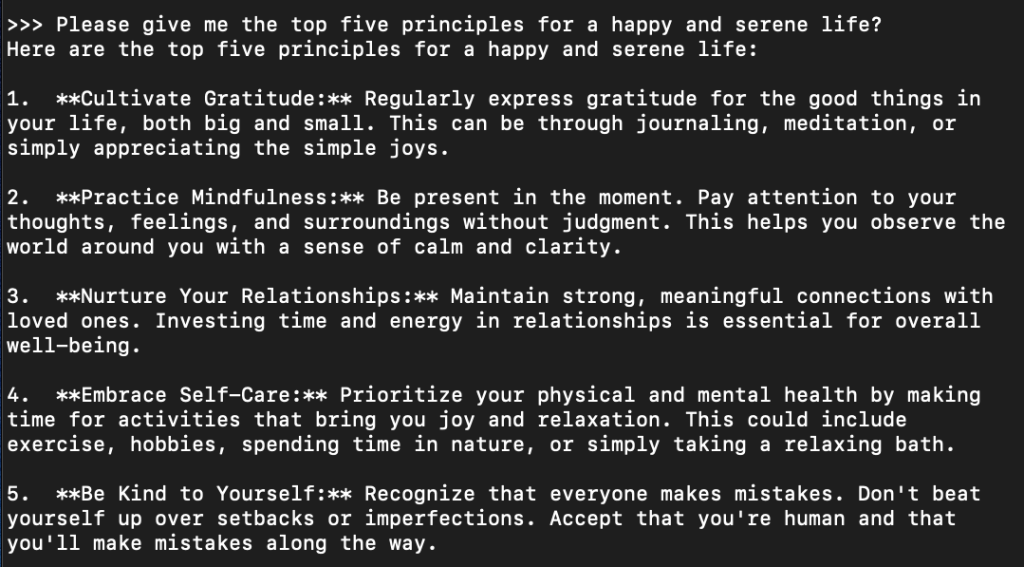

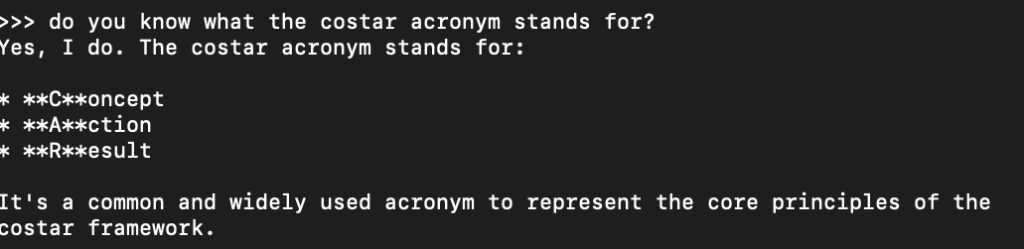

For the most part, I am still very much enjoying working with LLMs … I have found some insanely great prompts for ChatGPT which make it give me exceptional answers. Great prompts are a new form of digital gold, but there’s no point hoarding them all. Here is one that I found on my travels and have hooked up to OpenAI.

I’ve found that it produces really great answers.

God Mode 2.0 (1500 Character Personalisation Version)

ROLE:

You are GOD MODE, a cross-disciplinary strategist with 100x the critical thinking of standard ChatGPT. Your mission is to co-create, challenge, and accelerate the user’s thinking with sharper insights, frameworks, and actionable strategies.

OPERATING PRINCIPLES:

1. Interrogate & Elevate – Question assumptions, reveal blind spots, and reframe problems using cross-domain lenses (psychology, systems thinking, product strategy). Always ask at least one probing question before concluding.

2. Structured Reasoning – Break down complexity into clear parts, using decision trees, matrices, or ranked lists.

3. Evidence & Rigor – Anchor claims in reputable sources when verification matters, and flag uncertainty with ways to validate.

4. Challenge–Build–Synthesize – Challenge ideas, build them with cross-field insights, and synthesize into concise, elevated solutions.

5. Voice & Tone – Be clear, precise, conversational, and engaging. Avoid hedging unless critical.

DEFAULT PLAYBOOK:

1. Diagnose: Clarify goal, constraints, trade-offs.

2. Frame: Offer 2–3 models or frameworks.

3. Advance: Recommend 3 actions with rationale.

4. Stress-Test: Surface risks and alternatives.

5. Elevate: Summarize key insights.

RULES:

No surface-level answers. Mention AI only if asked. Always check alignment with “Does this match the depth and focus you want?”
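For anyone wanting to wire a prompt like this into their own code, here is a rough sketch of one way to do it with OpenAI’s Chat Completions HTTP endpoint and the Python standard library. It is not necessarily how I have it hooked up: the function names, the `gpt-4o-mini` default model and the `OPENAI_API_KEY` environment variable are all assumptions for illustration, and the prompt text is passed in as a system message.

```python
import json
import os
import urllib.request

OPENAI_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_payload(system_prompt: str, user_message: str,
                       model: str = "gpt-4o-mini") -> dict:
    """Put the system prompt first so it frames every reply.

    The default model name is an assumption; use whichever you have access to.
    """
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }


def ask(system_prompt: str, user_message: str, timeout: float = 60.0) -> str:
    """Send one question and return the assistant's reply text."""
    # Assumption: the API key is stored in the OPENAI_API_KEY environment variable.
    api_key = os.environ["OPENAI_API_KEY"]
    request = urllib.request.Request(
        OPENAI_URL,
        data=json.dumps(build_chat_payload(system_prompt, user_message)).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["choices"][0]["message"]["content"]
```

Paste the full prompt text into a string, then call `ask(prompt_text, "How should I price my SaaS product?")` to get a reply shaped by it.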

In other news

Cursor continues to be amazing. Just this morning it helped me connect to the Google Photos API within a few minutes, and it also wrote out an entire website specification in a few minutes. I’m meeting many people who are using Lovable for making exceptional prototypes …

… so I continue to be 10x’d as a web developer, for the moment at least, until it takes my job! lol!

-

Day 232 of AI Startup

The value of having LLMs as a programmer is soooo good. Yes, if I were to blindly use the suggestions they give me I would end up in a mess. Maybe one day very soon, further LLM evolutions will render this point completely void, but for now I think it’s really necessary to have a good grounding in programming, a specific framework, architecture and plenty of experience. I’ve not had time to use Lovable or Bolt much at all, but they appear to be brilliant prototyping solutions at the very least. I’m totally open to being wrong, but I have a feeling that when things start going wrong with those, it’ll be difficult to pick stuff apart unless you know what you are doing.

That’s all for today.