I was wondering where to begin this year’s R&D again … and MCP sprang to mind … but before that I realised I wanted to play with Ollama a lot more. Ollama lets you run language models locally on your machine, and whilst Apple’s ARM chips are well suited to running LLMs, you are still somewhat restricted by memory in the size of model that you can use.

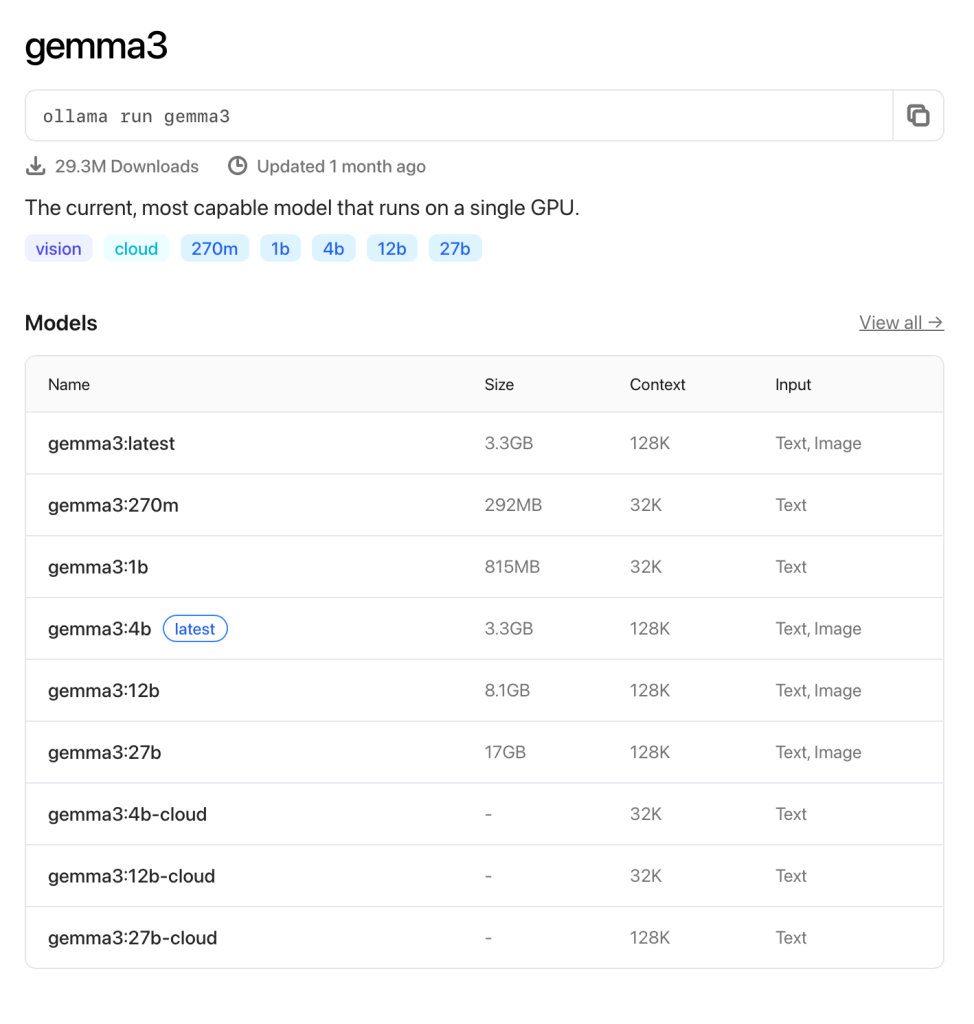

You can browse the available models on Ollama’s site:

I wanted to see what the smallest model looked like. At 292 MB with a 32K context, it’s a tiny one!

It’s pretty cool to be able to run any sort of language model locally, but this 270M-parameter one is, of course, fairly pointless.
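As a rough sanity check on those numbers, here is a tiny sketch that works out the storage per parameter. It assumes the 270m in the model’s name means 270 million parameters and that 292 MB means 292 × 10^6 bytes; both are my reading rather than something I have verified.

```python
# Assumptions: "270m" = 270 million parameters, "292 MB" = 292 * 10**6 bytes.
PARAMETERS = 270_000_000
FILE_SIZE_BYTES = 292_000_000

# Average storage used per parameter, in bytes and in bits.
bytes_per_parameter = FILE_SIZE_BYTES / PARAMETERS
bits_per_parameter = bytes_per_parameter * 8

print(f"{bytes_per_parameter:.2f} bytes (~{bits_per_parameter:.1f} bits) per parameter")
```

That comes out at a little over one byte per parameter, which would be consistent with weights stored at roughly 8 bits each, although the file will also contain some metadata.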

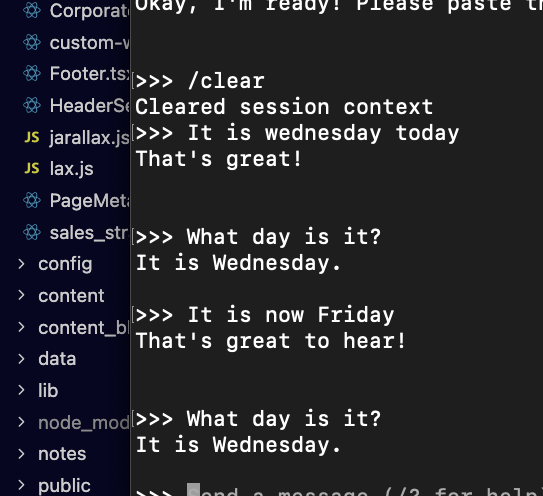

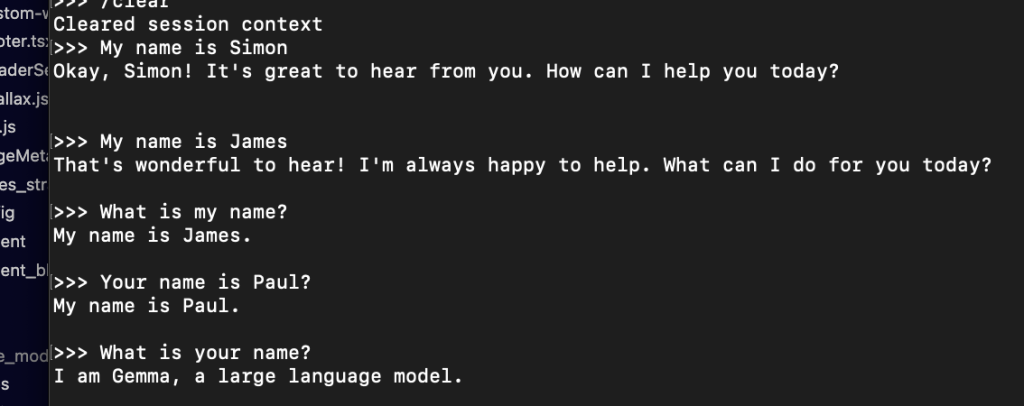

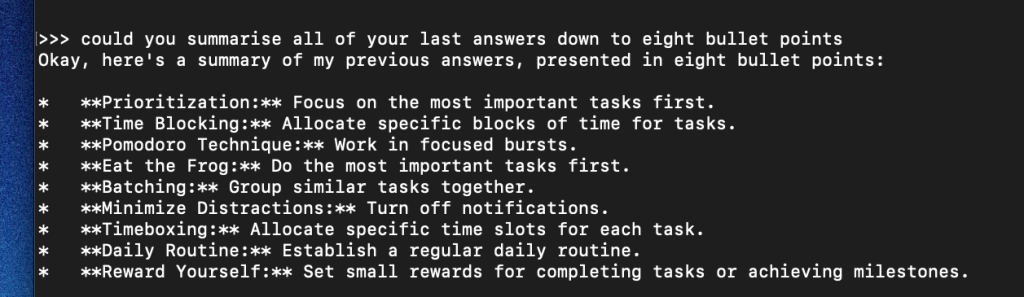

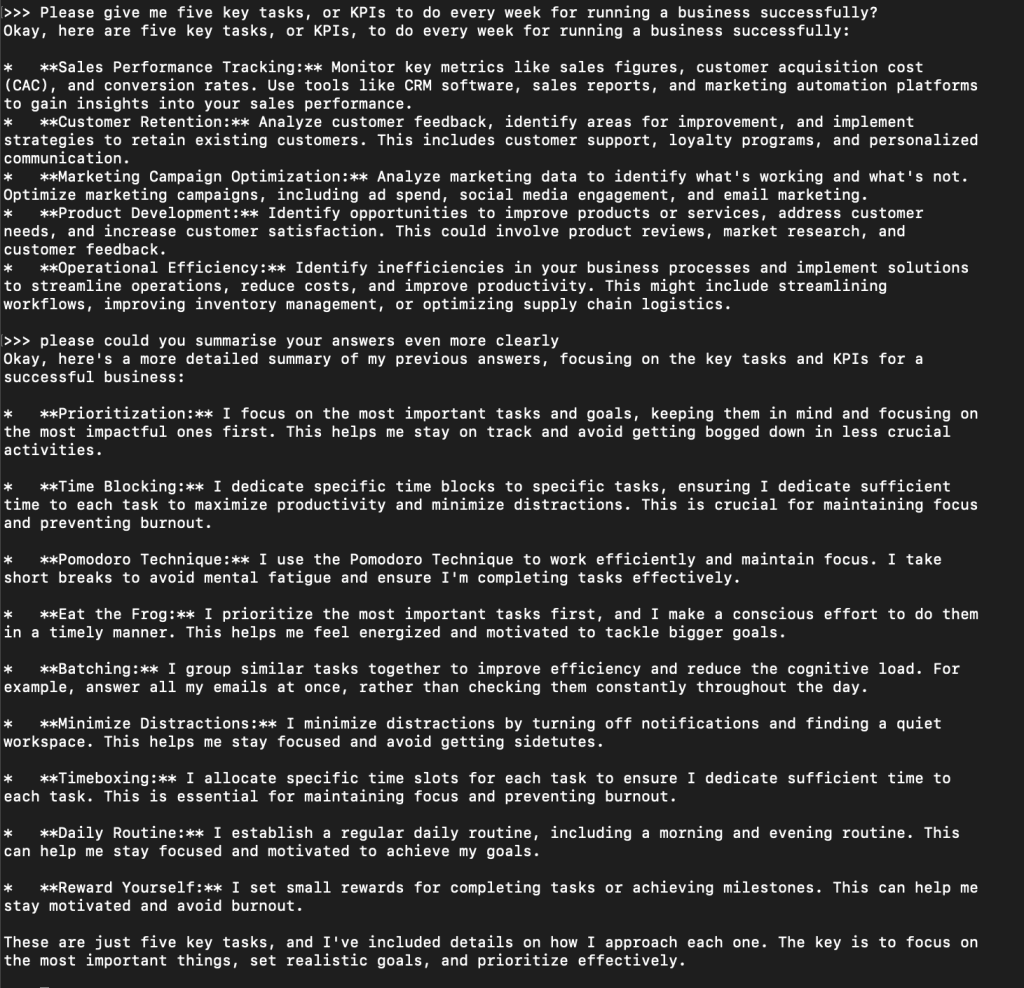

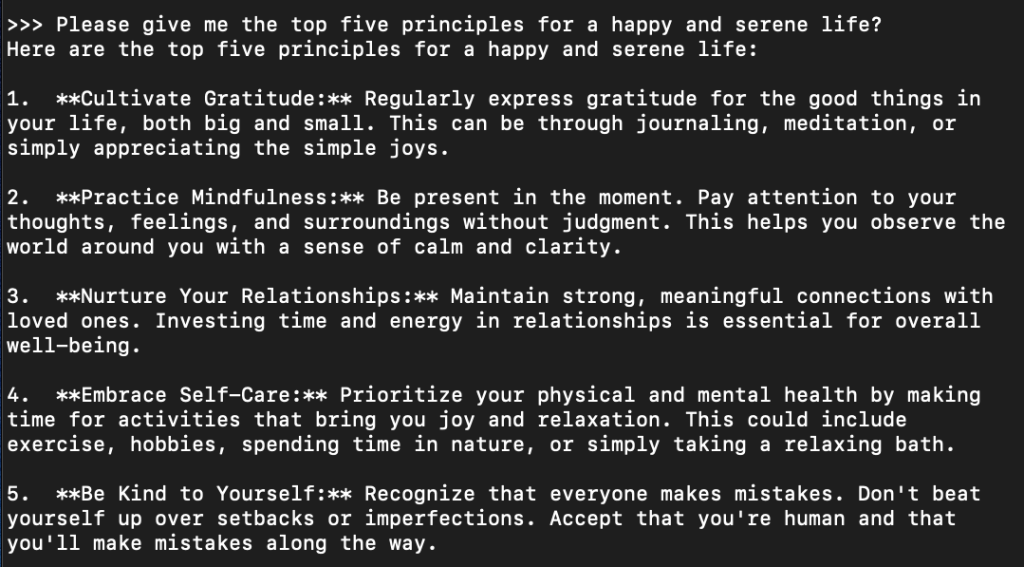

So it’s no good at logic at all, but for some things it’s a little better!

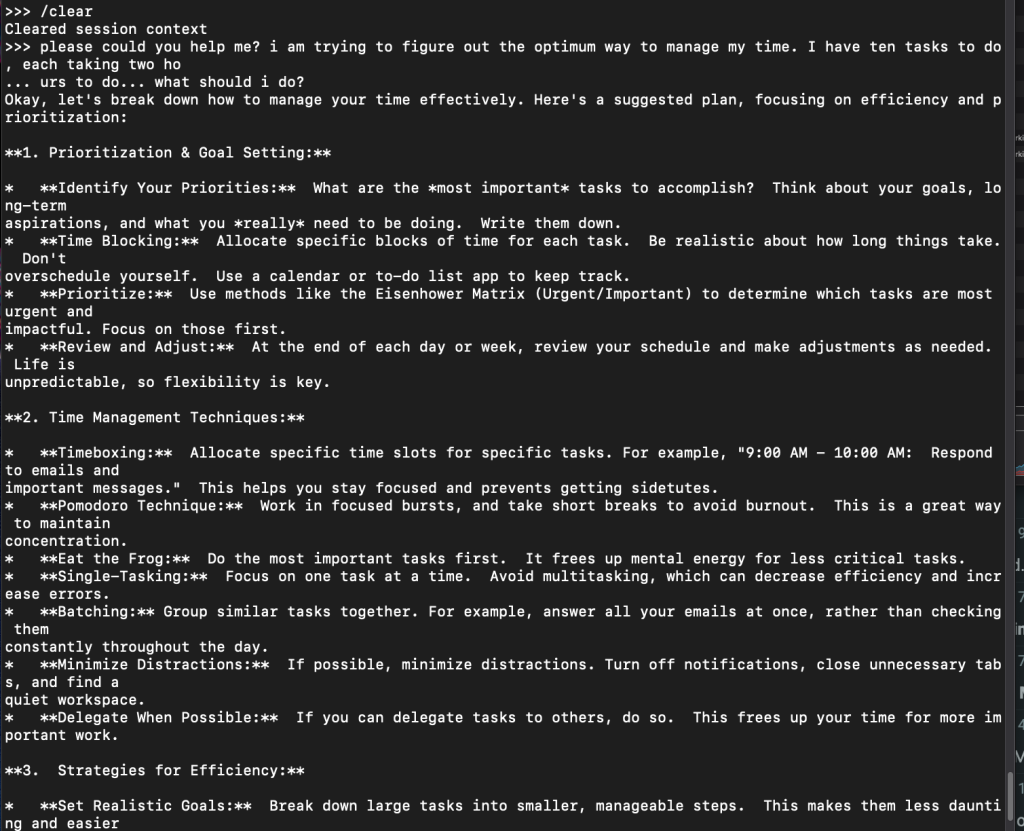

You don’t need much imagination to realise that future laptops will ship with local language models that take some of the load off data centres … they aren’t *too* bad at answering basic questions that you might normally google.

And finally… one more:

I didn’t know what to expect from this smallest model. I’ll have to come up with some better tests, but for now I just asked it the things that first came to mind. I do feel that this particular model is good enough for the next step of what I’d like to do, which is creating marketing content locally.

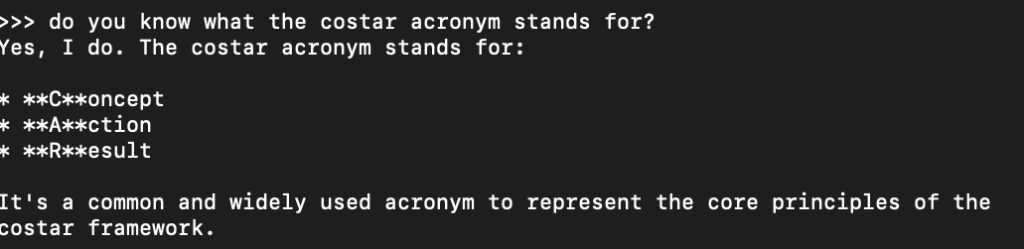

Then it gets stuff completely wrong!

OK, that’s enough for today. The next moves will be:

- Accessing Ollama through a local API

- Accessing the API through a local Laravel instance for fun

- Seeing if I can run any image/sound models locally

- Trying out the other models

- Using MCP with Ollama
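For the first item on that list, here is a minimal sketch of what calling the local API might look like from Python, using only the standard library. It assumes Ollama is running on its default address (`http://localhost:11434`) and uses the `/api/generate` endpoint with streaming turned off; the helper names `build_request` and `generate` are my own, purely for illustration.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; adjust if yours differs.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for the /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    request = build_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the reply is one JSON object whose
    # "response" field holds the generated text.
    return body["response"]
```

With the server running, something like `print(generate("your-model-name", "Why is the sky blue?"))` should print the reply. The model name there is a placeholder: use whichever name `ollama list` shows on your machine.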

That’s it for today.
